Autonomous helicopter landing system

2013 – 2014

I worked on this project during the last year of my master's degree and afterwards. The goal was to lower the risk in case of GPS signal loss and to enable precise landing for helicopter UAVs using embedded computer vision.

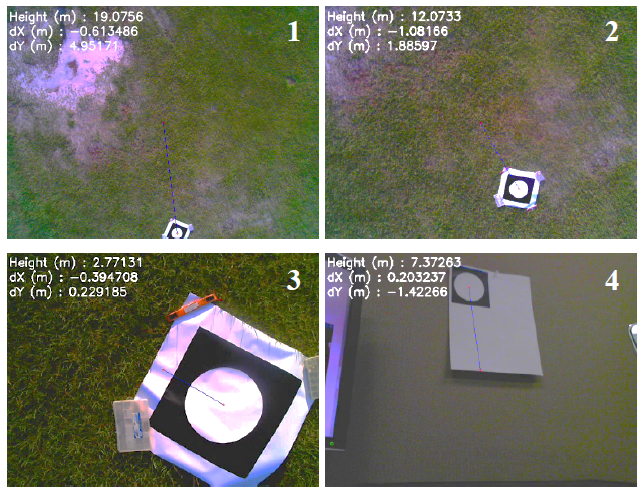

To that end, I designed the landmark around the camera field of view (FOV) and the requirement to guide the UAV from an altitude of 20 meters down to the ground. The landmark is a black board with a white circle 50 cm in diameter: small enough to carry, yet it still represents 630 pixels at 20 m. The circular shape makes detection easier, since a perfect circle is uncommon in nature, and the colors were chosen to maximize contrast, which eases thresholding and blob detection.
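The sizing trade-off can be checked with a simple pinhole-camera model. The sketch below is only an illustration: it assumes a camera pointing straight down, square pixels and no lens distortion, and the function names, the horizontal FOV and the image width are hypothetical parameters rather than the values used in this project.

```cpp
#include <cmath>

// Apparent diameter, in pixels, of a landmark of physical diameter
// diameter_m seen from altitude_m by a downward-facing pinhole camera.
// hfov_deg and image_width_px are hypothetical camera parameters.
double apparentDiameterPx(double diameter_m, double altitude_m,
                          double hfov_deg, int image_width_px) {
    const double kPi = std::acos(-1.0);
    const double half_fov_rad = 0.5 * hfov_deg * kPi / 180.0;
    // Width of the ground strip covered by the image at this altitude.
    const double footprint_m = 2.0 * altitude_m * std::tan(half_fov_rad);
    return diameter_m * image_width_px / footprint_m;
}

// Number of pixels covered by the disc, treating it as a perfect circle.
double apparentAreaPx(double diameter_px) {
    const double kPi = std::acos(-1.0);
    return kPi * 0.25 * diameter_px * diameter_px;
}
```

Calling `apparentDiameterPx` with the maximum altitude and the real camera parameters gives the smallest size the detector has to handle. The apparent diameter is inversely proportional to altitude, so halving the altitude doubles it.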

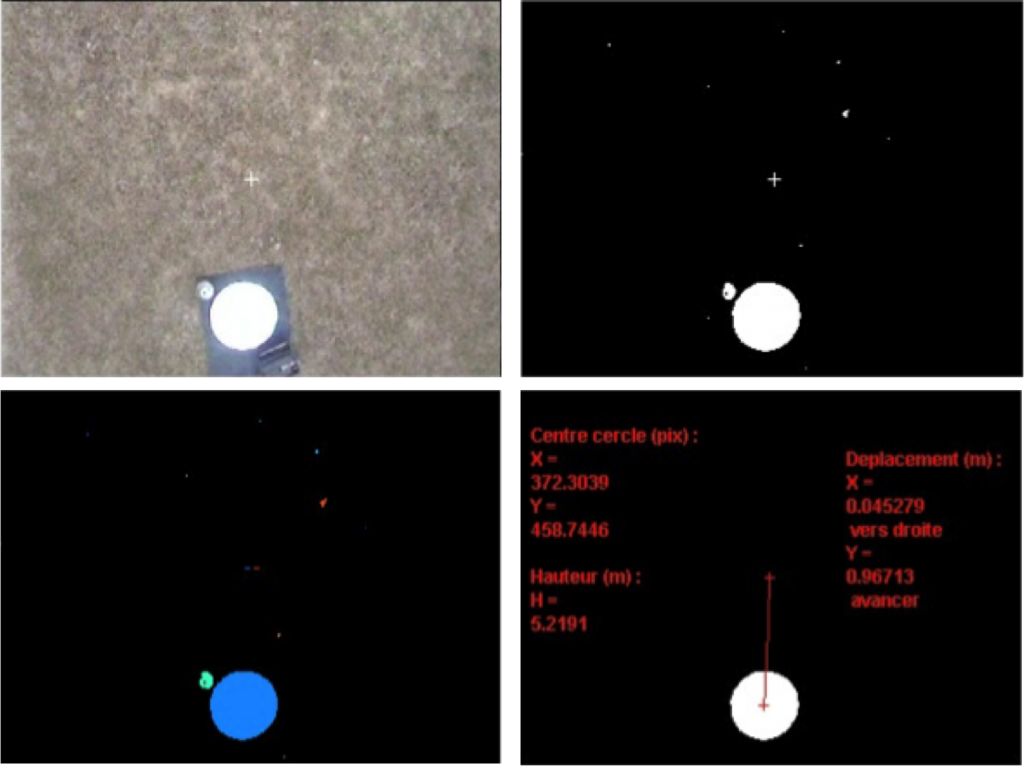

The algorithm consists of the following steps (shown in the figure below):

- Threshold the image to binary.

- Find blobs.

- Perform tests on those blobs to isolate circles.

- Compute the altitude from the camera FOV and the landmark's known dimensions.

- Compute the distances to travel to be directly above the landmark (these depend on the altitude).

NB: to avoid measurement errors, no morphological operations can be applied, since they would change the apparent size of the blobs.

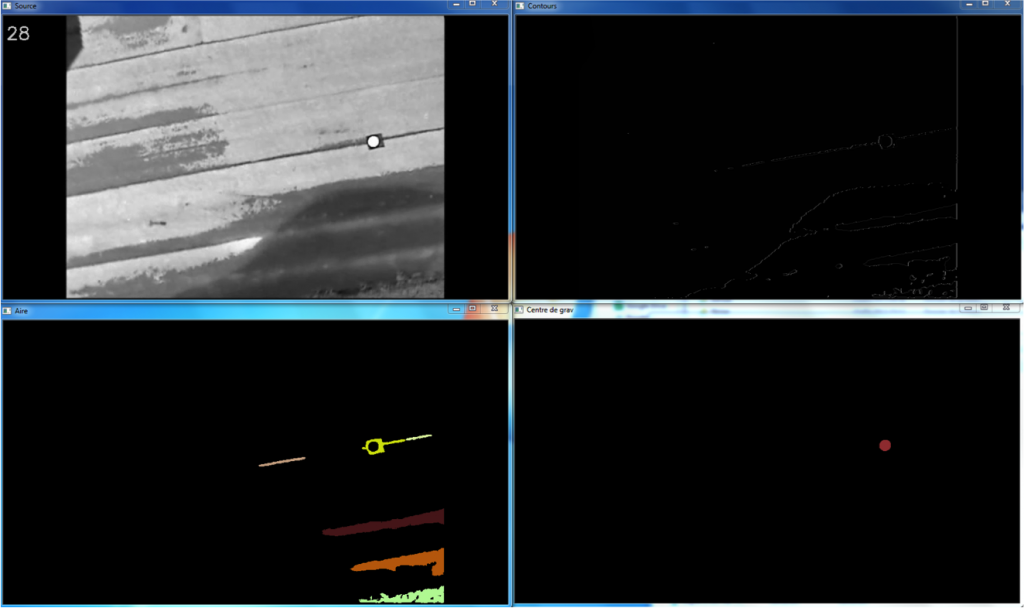
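The last three steps above can be sketched in plain C++ as follows. This is a minimal illustration, not the project's code: it assumes a camera pointing straight down, square pixels and no lens distortion. The `Blob` and `Camera` structs, the function names and the `0.85` circularity threshold are hypothetical, and the circularity test is just one common way to isolate circles. With OpenCV, the blob area, perimeter and centroid could for instance come from `cv::contourArea`, `cv::arcLength` and `cv::moments`.

```cpp
#include <cmath>

struct Blob {
    double area_px;       // number of pixels in the blob
    double perimeter_px;  // contour length in pixels
    double cx_px, cy_px;  // centroid in image coordinates
};

struct Camera {
    int width_px, height_px;
    double hfov_deg;      // horizontal field of view in degrees
};

struct Offset { double x_m, y_m; };

const double kPi = std::acos(-1.0);

// Circularity 4*pi*A/P^2: 1 for an ideal circle, lower for other shapes.
double circularity(const Blob& b) {
    if (b.perimeter_px <= 0.0) return 0.0;
    return 4.0 * kPi * b.area_px / (b.perimeter_px * b.perimeter_px);
}

// Hypothetical acceptance test; the threshold is an illustrative value.
bool looksLikeCircle(const Blob& b, double min_circularity = 0.85) {
    return circularity(b) >= min_circularity;
}

// Focal length in pixels, derived from the horizontal FOV.
double focalPx(const Camera& c) {
    const double half_fov_rad = 0.5 * c.hfov_deg * kPi / 180.0;
    return 0.5 * c.width_px / std::tan(half_fov_rad);
}

// Altitude from the apparent diameter of a landmark of known size.
double altitudeM(const Blob& b, const Camera& c, double landmark_diameter_m) {
    // Diameter of the circle that has the same area as the blob.
    const double diameter_px = 2.0 * std::sqrt(b.area_px / kPi);
    return landmark_diameter_m * focalPx(c) / diameter_px;
}

// Horizontal distances to travel so the landmark sits at the image center.
Offset offsetToLandmark(const Blob& b, const Camera& c, double altitude_m) {
    const double f = focalPx(c);
    return { (b.cx_px - 0.5 * c.width_px) * altitude_m / f,
             (b.cy_px - 0.5 * c.height_px) * altitude_m / f };
}
```

The offsets scale linearly with altitude, which is why the altitude has to be estimated first: the same pixel displacement corresponds to a larger ground distance when the UAV is higher.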

To run the algorithm onboard the UAV, I ported the MATLAB code to C++ using OpenCV.

Below is the result of the embedded processing, purposely using a low-quality webcam to test robustness to noise, wobble and color aberrations. It ran on a Raspberry Pi, and later on a Jetson TK1.